The scientific revolution began about 400 years ago, with Galileo. Although some would argue that we should place its beginning a bit earlier, with Copernicus, science really got going in earnest with Galileo and his contemporaries, Rene Descartes and Francis Bacon.

These pioneers introduced something truly radical at the time – naturalism. Naturalism is the notion that the processes of nature can be understood without recourse to supernatural causes. If something has a supernatural cause, it can never be understood by humans, by definition. But if it has a natural cause, we can find it, at least in principle.

More particularly, supernatural causes don’t operate the way natural causes do. Miracles are miracles. End of story. There is nothing more to explain, nothing more to understand. The human mind readily grasps the concept of natural causes, even if they are complex. If someone gets drunk, falls off a cliff, and dies, we can ask, “What caused his death?” Well, there’s a proximate cause. His body was crushed when he hit the bottom. But there’s also an ultimate cause. He was inebriated. We might even go back further and ask, “What caused him to be drunk? Did he have personal problems? Maybe he suffered some injustice. Maybe it was the fault of someone who gave him the drink.” But all of this causality, with all of its complexities, is completely understandable to us. These are natural causes. Not supernatural. They can be understood the same way a machine is understood. A and B cause C, which in turn causes D, and so on.

If I ask you, “Why is your house cool, even though it’s hot outside?” you might not be able to give me a detailed explanation. But SOMEONE could. No reasonable person would invoke a supernatural force to explain this. To get into the details, they would have to understand thermal insulation, electrical power, refrigeration, thermostats, and so on. But no one doubts that there is a natural, causal chain at work. Again, nothing supernatural.

Philosophers often use the word machine, or mechanism, to describe such a system. Unfortunately, both words are used colloquially to describe specific, man-made systems. In philosophy, a machine is simply anything that doesn’t require supernatural forces to operate. In this sense, a school of fish is a machine. The climate system is a machine. The solar system is a machine. These processes involve natural, understandable processes. Nothing miraculous is necessary.

For the first 200 years of its existence, science accepted that living things were fundamentally different in their makeup from non-living matter – that there was some vital force that gives life – well, life. The German scientist Carl von Reichenbach speculated that in addition to electricity, magnetism, heat, and gravity, there was some “life force” – he called it the Odic force. It is understandable that early scientists believed that life was fundamentally different from non-life – after all, living things do all kinds of things that we don’t associate with non-living matter. They reproduce themselves, they respond to stimuli, they consume resources, they compete with each other.

The notion that living things are fundamentally distinct from non-living ones is called vitalism. The origins of vitalism were unquestionably tied to belief in the supernatural. The word pneumatic comes from the ancient Greek word pneuma for breath – the pneumatics among the ancient Greeks believed that the air itself contained the life essence. Aristotle believed that there was a “connate pneuma,” an “air” within the sperm that contained the soul. Although the ancient Greeks distinguished between the breath and the soul, these concepts were inevitably blended together over time. In the King James Book of John, the very same Greek word pneuma is translated as both “wind” and “spirit.”

But it was not only human beings that were believed to contain a supernatural element. For millennia, it was quite generally believed that all life required a supernatural force to sustain it. It was not enough for living things to be “created” in some ultimate sense. After all, rocks had also been created – yet they didn’t eat, reproduce, respond to stimuli, or compete with each other. Living things, it was thought, had to be actively sustained by a supernatural life force.

As the science of chemistry developed, and scientists began to realize how complex the chemistry of life is, they thought they had hit upon the answer. The magic, they thought, was in the chemistry. They speculated that it would never be possible to create the complex chemicals of life from simple, inorganic molecules. But in the 19th century, one by one, complex organic chemicals began to be synthesized. The vitalists clung to their beliefs for decades, but eventually it became painfully obvious that there was no “break point” – the complex chemistry of life was different only in its degree of complexity from the simple chemistry of non-living matter. The one transitioned smoothly to the other.

It became equally clear that the bodies of living organisms were machines – which is to say, that they were different only in complexity from man-made machines. Their organs were machines, highly intricate of course, but then the organelles of individual cells are highly intricate too. Today cell lines, even human cell lines, are routinely grown in the laboratory. No serious, educated person believes that these cell lines have supernatural qualities. The organs of the human body are machines. The liver is a machine. The lungs are machines. The heart is a machine. Everything these organs do can be explained without recourse to “vital forces,” or supernatural causes, any more than we need such explanations for the opening of a flower, or the flight of a bird. There’s nothing left for vitalism to do there.

And then there’s the brain. The human brain is the most complex piece of the universe we know of. The human brain is the last refuge of vitalism. There’s no other place for it to go. If there is any “vital force,” it must reside in the brain. Of course, the idea is not a new one. But it’s important to realize that for millennia, people believed that EVERYTHING living was sustained by a supernatural force. Eventually a sharp demarcation was made between humans and everything else. And finally, between the brain and everything else.

In the mid-20th century came the digital computer. By this time, of course, science had come to the view that what really distinguished human beings from animals was not desire, fear, or other such basic emotions, but reason, analysis, and foresight. These were the “higher functions” of the human brain, the things that human beings are very good at. Animals merely responded to stimuli. Humans made plans and solved complex problems. Animals were merely complex machines. But the human brain? That was different. Unfortunately for this viewpoint, logic turned out to be, if you’ll pardon the pun, a no-brainer. It is simply a matter of creating a system that can represent simple alternatives.

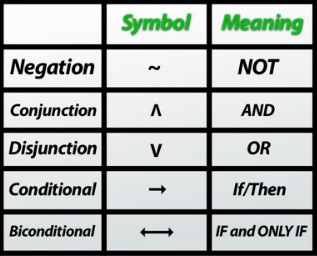

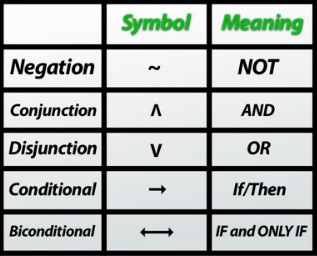

An electrical switch is more than just a way of turning power on and off. It provides us with a way of REPRESENTING something. When the switch is off, we can call that a 0. When it’s on, we can call that a 1. Behind this seemingly mundane fact lies enormous power. For one thing, any number can be represented by a series of zeros and/or ones. And then there’s logic itself.
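To make the idea concrete, here is a minimal sketch of how a row of on/off switches can stand for a number. The function names `to_switches` and `from_switches` are my own, chosen for illustration, and the choice of putting the most significant switch first is just a convention.

```python
def to_switches(n: int, width: int = 8) -> list[bool]:
    """Return the on/off states (most significant switch first) that represent n."""
    if n < 0 or n >= 2 ** width:
        raise ValueError("n does not fit in the given number of switches")
    # Each switch answers one yes/no question: is this power of two present in n?
    return [bool((n >> i) & 1) for i in reversed(range(width))]


def from_switches(switches: list[bool]) -> int:
    """Read a row of switches back as an ordinary number."""
    value = 0
    for is_on in switches:
        value = value * 2 + (1 if is_on else 0)
    return value


bits = to_switches(13, width=4)
print(bits)                 # [True, True, False, True], i.e. 1101
print(from_switches(bits))  # 13
```

Four switches are enough for the numbers 0 through 15. Add more switches and the range doubles with each one.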

Here’s a simple logical sequence, an example of what logicians call a logical connective. If A is false or B is false, C is false. But if both are true, C is true. This simple logic can be represented by a logic gate containing 2 switches, called an AND gate. Only if both switches are on will the output be on. Other basic logic gates can be constructed from small numbers of switches – OR gates, NOR gates, NAND gates. Put a lot of such gates together, and you have a powerful logic circuit.
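The gates described above can be sketched in a few lines. This is only an illustration in software, not a description of real circuitry: each function stands in for a gate, and `half_adder` is my own example of what happens when you wire a few gates together (it adds two one-digit binary numbers).

```python
def and_gate(a: bool, b: bool) -> bool:
    # Like two switches in series: the output is on only if both are on.
    return a and b


def or_gate(a: bool, b: bool) -> bool:
    # Like two switches in parallel: either one is enough.
    return a or b


def nand_gate(a: bool, b: bool) -> bool:
    return not and_gate(a, b)


def nor_gate(a: bool, b: bool) -> bool:
    return not or_gate(a, b)


def half_adder(a: bool, b: bool) -> tuple[bool, bool]:
    """Add two one-bit numbers; return (sum_bit, carry_bit)."""
    # "One or the other, but not both", built only from the gates above.
    sum_bit = and_gate(or_gate(a, b), nand_gate(a, b))
    carry_bit = and_gate(a, b)
    return sum_bit, carry_bit


# Print the truth table for the AND gate and the half adder.
for a in (False, True):
    for b in (False, True):
        print(a, b, and_gate(a, b), half_adder(a, b))
```

Chain enough adders like this one together and you can add numbers of any size, using nothing but switches.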

Such a circuit doesn’t just perform an operation. A tractor can be used to plow a field. A refrigerator can keep your food cold. But a logic circuit can be used to REPRESENT. The whole symbolic world of categories and relationships opens up. Therein lies the power of digital computers. The result is reasoning. Analysis. Prediction. This is why a computer program like Watson can be a Jeopardy! champion. Far from being the exclusive realm of “higher” brain function, logic and reason turn out to be relatively easy for computers to perform – it is merely a matter of enough computer power and the right software. It is the “animalistic” emotions, such as lust and fear, that turn out to be tricky – but no one argues that these are unique to human beings.

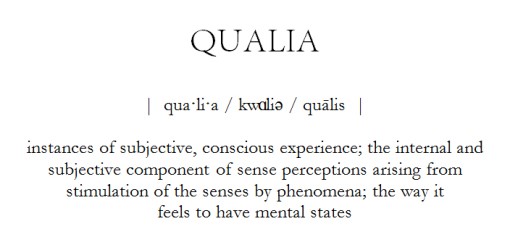

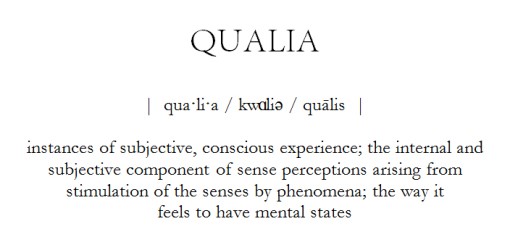

What’s left? Consciousness. Consciousness is the last big mystery. What is it, exactly? We still don’t really know. Some argue that it’s experience – something that takes mere sensations and translates them into qualities. The color red, for example, or the smell of banana. Others argue that it’s self-awareness. A cat may chase its own tail, but a person never would, because a person has a clear sense of self.

So the question becomes, is consciousness the result of a machine at work? Or is it something beyond the capability of any machine, no matter how complex or sophisticated? Is there a spark of vitalism in the human brain that produces what we call consciousness?

It’s important to realize that people who make their living examining such questions do not have the luxury of ignorance. A cognitive scientist cannot close his mind to the mountain of evidence on the subject. His job requires him to be aware of the facts, however uncomfortable they may be. He cannot stand up in front of his colleagues and say, “I’m gonna pretend that neuroscience doesn’t exist, and just go with what I feel comfortable with.”

People who are knowledgeable about such things, neuroscientists, cognitive scientists, philosophers of the mind, and so on, generally agree that consciousness, in the end, will yield to naturalistic explanations. They can see the mysteries of human experience and thought yielding one by one to the methods of science. Much as the mystery of the opening of a flower once yielded to naturalistic explanations, the seeming intangibles of the human mind are doing the same. Attention for example.

At this moment, your attention is focused on these words (hopefully). Your eyes and ears are receiving lots of other inputs. But your mind is filtering. If you shift your attention, you might become aware of a motor humming, or a bird singing, or a song in your head. When people dream, they’re usually not aware that they’re dreaming. But such awareness can happen – that’s called a lucid dream. What is going on there? The whole phenomenon seems very intangible and mysterious. Our language even suggests that attention is somehow tied to the core of our being – when someone is talking to me and I seem distracted, they might say, “You’re not here.”

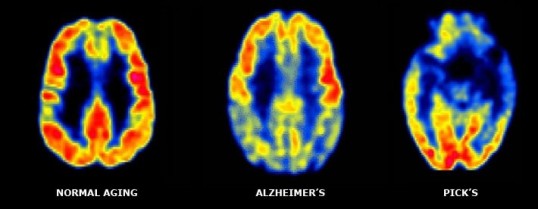

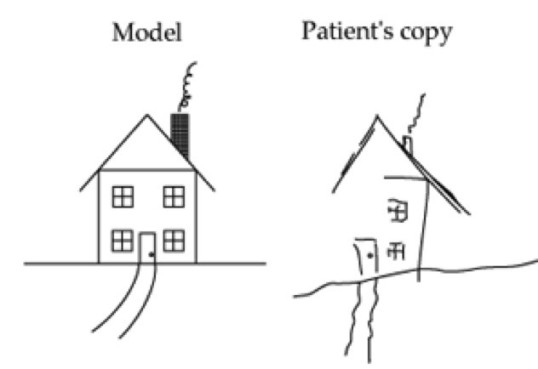

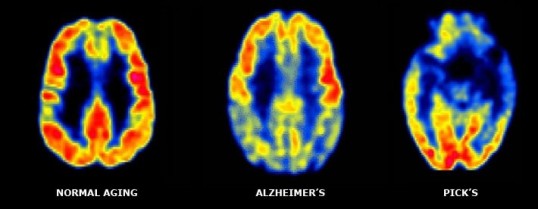

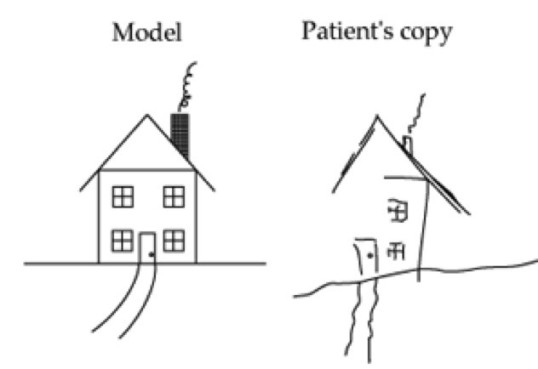

But science is showing us that attention is not so mysterious. There is the phenomenon of hemispatial neglect, caused by damage to one side of the brain. The person can see just fine, but they cannot PROCESS one side of their visual field. They are not AWARE of it. It is not a deficit in vision per se. A person who has hemispatial neglect affecting his left side field of view may have nothing wrong with vision in either eye. In fact, some people with hemispatial neglect can see every object in a room, yet can only perceive one side of each object! Studies have shown that people with hemispatial neglect can even have difficulty with one side of their field of view in DREAMS – this is revealed by recording their eye movements during REM (dream) sleep.

Then there is prosopagnosia, also called “face blindness.” Again this is caused by specific types of brain damage. The person is quite capable of seeing faces, and drawing faces, but does not RECOGNIZE people by their faces. The person may even be unable to recognize his own face. It could be argued that this is not an attention problem, but an interpretation problem. Either way, it is certainly not a vision problem.

And then there’s sound. How many times have you been focused on something while someone was talking to you, only to have their words register in your awareness many seconds later? Selective auditory attention is something all of us do, all the time. We filter out much of our soundscape and focus on particular sounds. Our limitations make for some interesting results. When giving a lecture, one must focus on a number of things at once – the structure of the presentation, the behavior of the audience, and so on. The need to multitask means that it is difficult to focus on HEARING ONESELF, and as a result, a mistake is often made without realizing it. On more than one occasion I have made a mistake in a lecture which I only became aware of many seconds after I made it. This illustrates that auditory attention has a lot to do with memory – with the virtual world your mind is constantly constructing, from the bits of sensory information it is constantly filtering and storing in memory.

Other mental phenomena, such as judgment, rules of thumb, decision making, language comprehension, and intuition, turn out to be not so mysterious either. So-called “commonsense reasoning” is one of the more interesting aspects of human thought that is yielding to science. This refers to the ability to cope with typical life situations by understanding the properties and relationships human beings deal with routinely. For example, human beings generally understand things like, “A robin is a bird. Robins can fly. Most birds can fly. But not everything that flies is a bird. A bumblebee can fly too. It can also sting. But not everything that stings can fly. And not everything that flies can sting.” And so on. Our commonsense reasoning depends on a multitude of such “understandings” about the world, and there are aspects of it that we still don’t fully understand. But the mystery of it is, year by year, giving way to understanding. And anything that we can understand is, by definition, not supernatural.
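Here is a toy sketch of how a handful of such “understandings” might be stored and queried. It is only an illustration, not how any real commonsense reasoning system works. The names `IS_A`, `FACTS`, `lookup`, and `is_a` are my own, and the penguin is an example I have added to show how “most birds can fly” leaves room for exceptions.

```python
# Category membership: each thing points to the broader category it belongs to.
IS_A = {"robin": "bird", "penguin": "bird", "bumblebee": "insect"}

# Facts attached at the most general level where they usually hold.
# A more specific entry overrides a more general one.
FACTS = {
    "bird": {"can_fly": True},        # most birds can fly
    "penguin": {"can_fly": False},    # an exception (added for illustration)
    "bumblebee": {"can_fly": True, "can_sting": True},
}


def is_a(thing: str, category: str) -> bool:
    """Check whether `thing` belongs to `category`, directly or indirectly."""
    current = thing
    while current is not None:
        if current == category:
            return True
        current = IS_A.get(current)
    return False


def lookup(thing: str, prop: str):
    """Return the most specific known value of `prop`, or None if unknown."""
    current = thing
    while current is not None:
        facts = FACTS.get(current, {})
        if prop in facts:
            return facts[prop]
        current = IS_A.get(current)
    return None


print(lookup("robin", "can_fly"))       # True, inherited from "bird"
print(lookup("penguin", "can_fly"))     # False, the exception wins
print(is_a("bumblebee", "bird"))        # False: it flies, but it is not a bird
print(lookup("robin", "can_sting"))     # None: nothing known either way
```

The hard part, of course, is not the lookup but the sheer number of such facts a person carries around, and knowing which ones matter in a given situation.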

What about emotions? Fear, love, ambition, pleasure, disgust? As I said, many animals clearly experience emotions, so there’s nothing uniquely human about many of them. We now know that the brain’s limbic system, not the cerebrum, is where a person’s emotional life is largely housed. One particular part of the limbic system, for example, the amygdala, is clearly the focus of the emotion of fear. Direct stimulation will force the person to feel intense anxiety, and damage to the amygdala can reduce fear. In one famous case, that of a woman often referred to as SM-046, the amygdala is completely destroyed. This woman lives in a dangerous neighborhood and has been held up at both knifepoint and gunpoint. She was almost killed in a domestic violence assault. Yet she never showed any sign of fear in these situations.

We think of pleasure as an emotion, which of course it is, but we tend to think of pain as a physiological response. Yet we know that both of these are largely housed in the limbic system, not the cerebrum. Pleasure, in fact, is the one that seems more localized – intense feelings of pleasure can be produced by direct stimulation of the lateral hypothalamus, in the limbic system. This very same area of the brain, when stimulated directly, produces intense pleasure in rats, just the same as it does in humans. There is nothing supernatural or uniquely human about it. We humans merely interpret the feelings in much more elaborate and complex ways, thanks to our large brains with their ability to abstract.

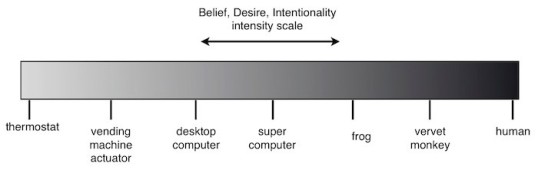

A beetle, like most animals, is attracted to certain things and avoids others. Does it feel emotions? A robot can be programmed to move toward certain things and avoid others. Does it EXPERIENCE emotions? This question leads us to one of the most controversial topics in the philosophy of mind – what is experience? Is it something independent of function? And how is it related to consciousness?

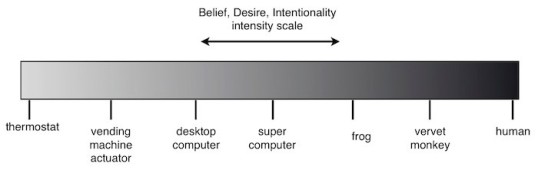

Does an insect have experiences? How about a fish? A lizard? A rat? A gorilla? Few people who have spent much time with gorillas would deny that they have experiences. Few dog-owners would deny that dogs have experiences. The trouble is, there is no “break point,” no clear demarcation in the animal world that we can point to, between an animal that has experiences and one that doesn’t. Experience, like emotion, doesn’t seem to be uniquely human. That doesn’t mean every goal-seeking system has experiences. A thermostat is a goal-seeking system. It probably doesn’t have experiences.

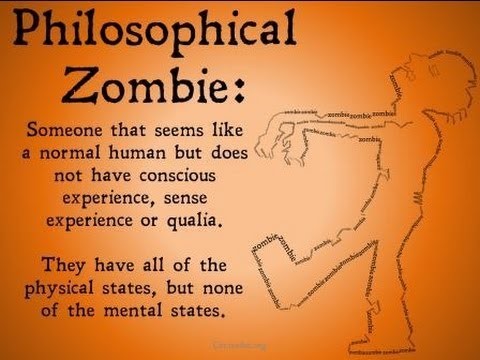

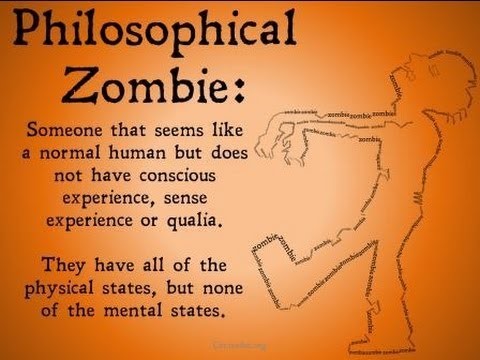

Many philosophers argue that experience is critical to consciousness. They believe it is possible for a system to do everything a human being does – yet have no experiences, and therefore no consciousness. Such a system is called a philosophical zombie. They distinguish between FUNCTION and EXPERIENCE. Others believe that any system that behaves the way a human being behaves must have experience and consciousness – that philosophical zombies are an impossibility in real life. The debate rages on.

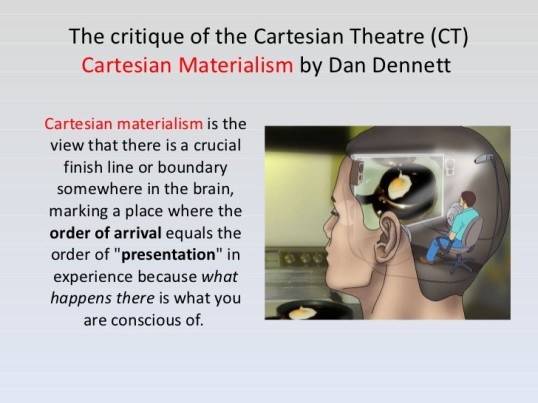

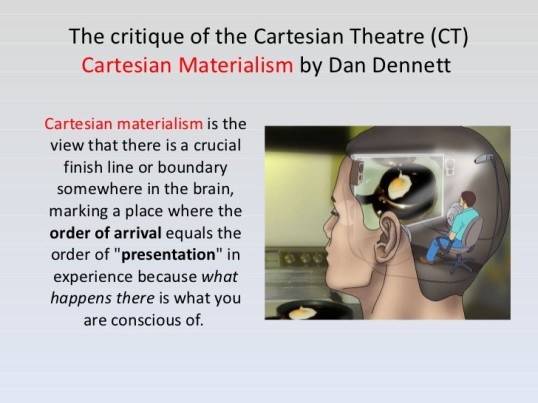

Daniel Dennett is a brilliant philosopher. He has argued that our notions of experience tend to be skewed because of Rene Descartes. Descartes suggested that there is a kind of inner observer taking in the world in the way we would take in a play in a theater, and this idea has stuck. The Cartesian theater seems reasonable – that human experience is like a play, or a movie, in one’s head. That there is a little observer taking it all in, distinct from the play itself – the core of the person. Their soul.

The problem with this idea, as Dennett points out, is that we have plenty of evidence that the so-called play is constantly being changed, even as it proceeds. It’s more like a bunch of plays happening at once, different interpretations of a basic script. All of them are processed by the brain at some level. But what is processed is only part of the story. And awareness is far from straightforward.

Consider dreams. Usually, when we dream, we don’t realize we’re dreaming. We often respond to what we’re “experiencing” in the dream. But we also accept ridiculous things in our dreams that we would normally dismiss. We don’t normally walk out of one house and suddenly appear in another house miles away. It’s almost as if we ARE zombies – responding to these happenings, but not really “all there.” Yet some part of “us” is there – isn’t it?

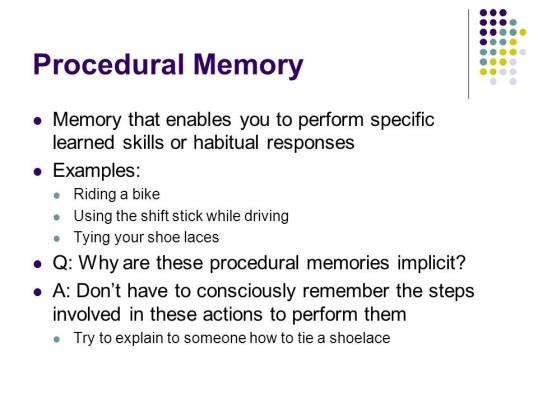

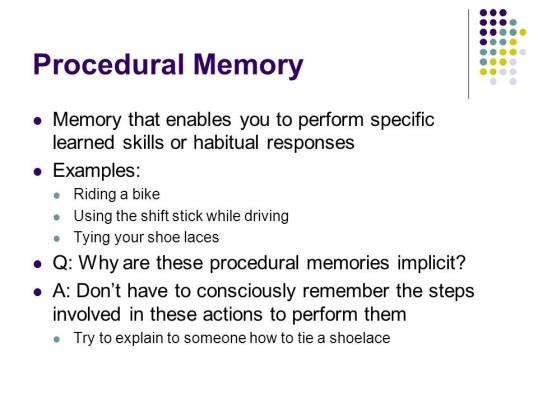

Another example is what is called procedural memory. How many times have you entered a password without being fully conscious of the process? It’s almost as if your fingers know the password themselves. In fact, sometimes people do better by NOT trying to think of the password, but simply “letting their hand do it.” This might seem like an example of philosophical zombieism at work – but performing a repetitive action like this, and doing everything a human being does – those are 2 very different things. And it illustrates, again, that clearly there are different levels of experience. Different sensations, and even actions, get different priorities at any given moment. So where do we draw the line between what constitutes an experience and what does not?

And then there are the effects of various drugs, alcohol for example. Long before a person loses consciousness completely from intoxication, their level of awareness will be affected. Later they may not even remember much of what transpired while they were under the influence. Perceptions will be hazy. The “sharpness” of normal conscious experience will be diminished. So where do we draw the line? How much diminishment is necessary before we say, “You’re not there any more”?

All of these things tell us that conscious experience is a matter of degree. Perceptions and memories are being processed and stored at different levels. Conscious experience is not like a finished stage play. It is more like a constant work in progress, in which spotlights of various intensities are constantly shifting from one part of the stage to another – and the whole play itself is constantly changing. Even the “recording” – the memory of what transpired – will change over time.

So the question becomes, what degree of experience is necessary for a given behavior? Is a philosophical zombie possible? Could a machine really duplicate the full range of human behavior without having full-blown experiences? I doubt it – because human behavior is intimately connected to human experience.

Without memory, there is no experience. Dreaming or waking, perceptions are being recorded, at some level. But a completely unconscious person does not record perceptions in memory. Therefore he has no experiences. A “conscious” person (including a dreaming person) is a remembering person. But perceptions are constantly being filtered, and multiple versions of “the world” are considered. It is not just a matter of what is seen, heard, or felt, but how our minds interpret these sensations. There is no sharp demarcation between “conscious” experience and “subconscious” experience. In a typical dream, for example, there is awareness at some level – but not necessarily awareness of the nature of what is being experienced. The same basic principle applies to waking life. You may perceive a pattern of dots on a screen. Then, suddenly, you realize that it’s an image of a dog.

In many cases, the transition from subconscious to conscious is smoother and more gradual. Your attention may be on a particular object at a particular time. At a subconscious level, your mind is taking in much more. Later, you may be able to retrieve those perceptions into full consciousness. If you are driving in heavy traffic and someone is talking to you, you may hear them, but not REALLY HEAR THEM. There is no clear demarcation between the conscious and the subconscious. It is all a matter of degree. Thus we have expressions like, “You’re not really paying attention to me.”

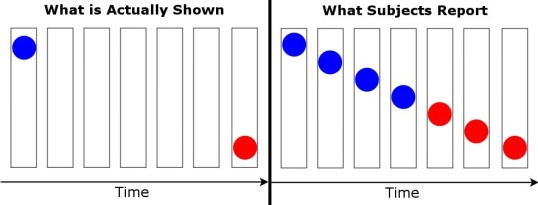

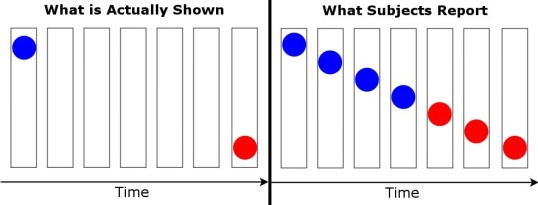

The narrative is not fixed. There is no recording, in the sense that a movie is recorded on disk. It is constantly changing. A good illustration of this is what is called a phi illusion. A colored dot is displayed for a moment. Then another dot of a different color is displayed nearby. Typically, a person looking at this will perceive movement between the two. What’s more, the moving dot will appear to change color as it moves. But there is no movement – merely 2 dots being displayed at slightly different times. Yet the human mind creates this experiential narrative that the dot moved from one place to another and changed color in between. The only way it could do this is to perceive the second dot on some level, then create the experience that something occurred (which didn’t occur) before the second dot appeared.

Our minds do this all the time, when we watch movies. Movies, unlike stage plays, consist of single frames displayed in sequence at high speed. There is no movement, but our minds interpret what we see as movement. At each moment, perceptions are being filtered and memories are being recorded. New perceptions can change the narrative. “There is movement going on here,” the mind insists. All of this happens very fast of course. It’s easy for us to believe that there is a continuous stream of experience, as if there is an inner observer watching a play.

Dennett is arguing that conscious experience is not fundamentally different from subconscious experience. It is a matter of degree. Our thoughts, feelings, and actions are affected by both. So does a spider have experiences? Yes, according to this view. Any system that receives and processes information has experiences. Experiences are an inevitable by-product of the processing of sensory inputs. But the richness of the experience depends on the sophistication of the processing system. What we call attention is a heightened level of experience, and this requires a lot of processing power, as well as the right software. The spider’s experiences are presumably not nearly as rich as those of a human.

The difference between a human and something like a spider, according to this view, is the ability to abstract. Our minds interpret our perceptions at a level that is not matched by other species. An object in our field of view becomes much more than that. We assign it to a category, and quickly we build a mental picture of its relationships to other objects. A chair for example. A dog may sit in a chair or a sofa. But there is no evidence that it understands the category “chair” as distinct from “sofa.” There is no evidence that it understands the relationships between chairs, sofas, beds, and tables.

Any system can be abstracted at different levels. The first level is the physical. This is the level of matter, energy, physical relationships. A human being consists of atoms in a specific arrangement. It has mass, volume, and so on. The second level is design. This is the level of biology and engineering – understanding systems in terms of function or purpose. A human being is adapted for consuming food, escaping predators, communicating with other humans, and so on. The third level is intention – understanding systems in terms of goals, beliefs, desires. A human being has desires – the desire for acceptance, for freedom, for dignity, and so on. It is the intentional stance, Dennett believes, that is critical to what we think of as consciousness.

Human beings attribute intention to other human beings, as well as to animals. This allows us to predict their behavior without really understanding the details of what makes them tick. This is what human beings do, and do well, that is so unusual. We abstract at a level beyond that of other species. We look for indications of intention in the world. We find it in other people and animals. And we recognize it in ourselves. Unlike us, animals, while having intention, don’t seem to RECOGNIZE it as such – they just don’t abstract the way we do. Dennett is making the case that this is the essence of consciousness – that any system capable of these things would have what we think of as consciousness.

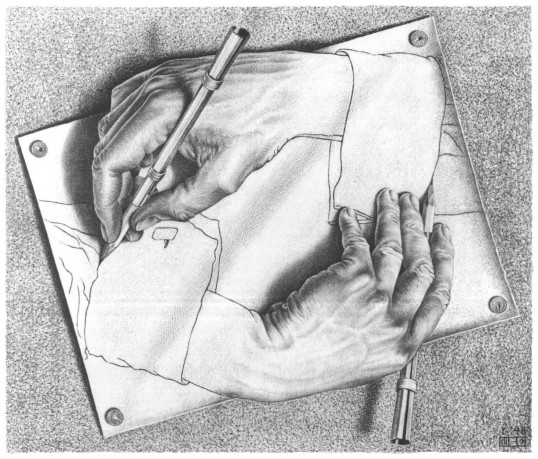

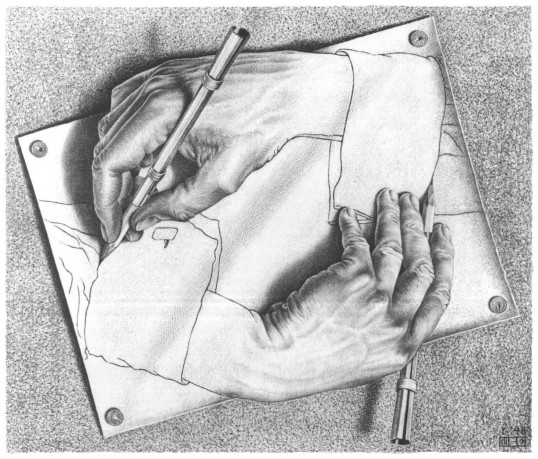

I think there’s a lot to be said for this viewpoint. Dogs almost certainly have experiences. But dogs do not seem to be able to abstract – or if they do, they do so very poorly. We humans have a mental world of categories and relationships. That’s what language is. That’s what mathematics is. Because of this, we are able to make plans, to solve problems, to understand the world in ways that are beyond those of other species. And importantly, we create mental models of ourselves. This, Dennett believes, is what we have in mind when we conjure up the misleading construct of the Cartesian theater. The “self,” the unified “I” that we have in mind, the “one” who is supposed to be watching the show, is just another abstraction, another mental model created by our minds.

It’s a very useful abstraction, of course. In that sense, it’s quite real, just as the concept of the center of gravity is useful, therefore real. The mind creates a mental model of itself, even though it’s constantly changing and there’s no “one” actually watching the show. A beetle cannot recognize itself in a mirror, because it has no mental model of itself. But we do. What we have trouble with is the notion that the modeler and what is being modeled are the same thing.

There is a more subtle question about consciousness, which is this: Is consciousness computable? When computer scientists say something is computable, they mean that it is possible to create, in principle, a set of instructions, a program if you will, that will produce the phenomenon in a finite amount of time. Surprising as it may seem, we know that there are problems that are not computable – they have no method of solution that will complete within a finite time.

For example, a computable number is one for which we can write a computer program that will calculate it to however many decimal places we specify. The number pi, for example, has an infinite number of digits. Yet surprising as it may seem, even pi is computable – in fact, the program is a pretty simple one. For most real numbers, however, this is not possible. They are not computable. This is just one of many examples of non-computable outcomes.
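To show what “computable” means in practice, here is a minimal sketch of a program that produces pi to any requested number of decimal places. It uses Machin’s formula, pi = 4 × (4 × arctan(1/5) − arctan(1/239)), with whole-number arithmetic. This is one of many possible methods, chosen for brevity. The function names and the 10 extra “guard” digits are my own assumptions, and the result is truncated rather than rounded.

```python
def arctan_inv(x: int, one: int) -> int:
    """Approximate arctan(1/x) scaled up by `one`, using only integers."""
    power = one // x          # holds one / x^(2k+1) as k increases
    total = power
    x_squared = x * x
    k = 1
    sign = -1                 # the series alternates: +, -, +, -, ...
    while power:
        power //= x_squared
        total += sign * (power // (2 * k + 1))
        sign = -sign
        k += 1
    return total


def pi_digits(places: int) -> str:
    """Return pi truncated to `places` decimal places, as a string."""
    if places < 0:
        raise ValueError("places must be non-negative")
    guard = 10                # extra digits to absorb rounding-down errors (assumed sufficient)
    one = 10 ** (places + guard)
    pi_scaled = 4 * (4 * arctan_inv(5, one) - arctan_inv(239, one))
    digits = str(pi_scaled // 10 ** guard)
    return digits if places == 0 else digits[0] + "." + digits[1:]


print(pi_digits(10))  # 3.1415926535
print(pi_digits(50))
```

The point is that the program is finite and short, yet you can ask it for 10 digits or 10,000 and it will finish and hand them over. For a non-computable number, no such program can exist.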

In 1989, physicist Roger Penrose argued that consciousness requires something that can’t possibly be duplicated in a conventional digital computer – quantum coherence. As I have explained in a previous post, quantum mechanics tells us that an isolated system can exist in more than 1 state at the same time – this condition of multiple states is called coherence. Penrose argued that this is critical to consciousness. If so, it introduces fundamental quantum randomness into the process by which consciousness is generated. In essence, Penrose was arguing that the brain, as far as consciousness is concerned, is a quantum computer. If so, then what it does cannot be performed by a conventional digital computer.

Penrose’s position is quite controversial. But notice that he is not arguing anything supernatural for consciousness – only that a system capable of consciousness must incorporate quantum mechanics as a critical element in its operation. A QUANTUM computer, properly designed, would presumably be capable of it.

Meanwhile, conventional digital computers continue to break new ground. From the beginning of the digital age, it was apparent that such supposedly “higher” functions as reason, analysis, problem solving, decision-making, language comprehension, and prediction could be performed by computers. As the decades have passed, more sophisticated forms of cognition, such as heuristics, pattern recognition, and commonsense reasoning, have slowly been conquered, although humans are still much better at these things than any computer. For now.

What we don’t yet know is what is required for a reasoning system to create a mental model of itself, and its relationship to the rest of the world. There is no evidence that a newborn human does this. It clearly requires a lot of mental sophistication, a high level of abstraction. It probably requires the intentional stance – the ability to understand and recognize beliefs and desires.

So where does all this leave us? Am I saying there’s no magic left in the universe? No, I’m not saying that. I’m only saying that the apparent mystery of human consciousness is understandable by us. It isn’t “supernatural” in the sense of incomprehensible. But the abstract reality understood and created by our minds is “supernatural” in the sense that it doesn’t exist for rocks, trees, and beetles. For them, our world of science, history, literature, and politics doesn’t exist, because they don’t have the hardware and software to grasp it. What we do, from their point of view, is magic. And by the same token, there may be beings whom we might consider “supernatural,” because they operate at an even higher level of abstraction than we do – even though for them, none of it is supernatural. They may even move through our world freely, undetected by us, just as an ant fails to understand the difference between a potato and a potato chip. We humans seem to have occasional glimpses of what we call the divine. Are we deluding ourselves? Maybe. Maybe not.

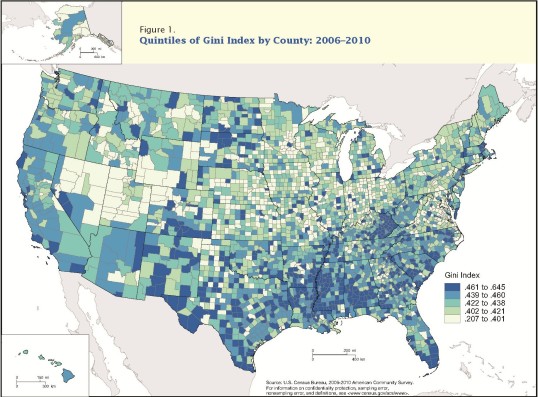

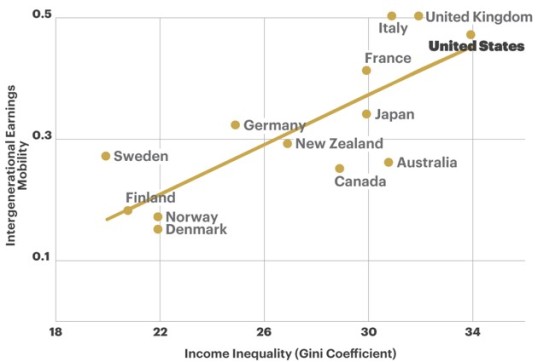

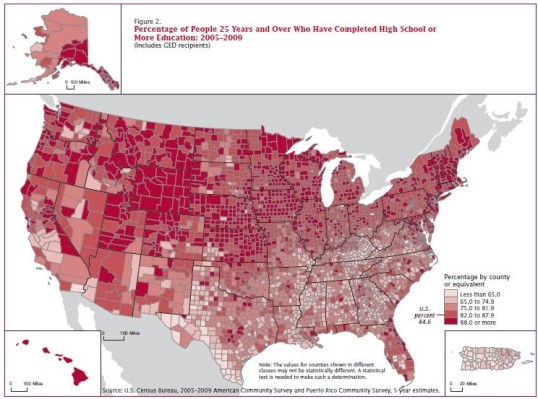

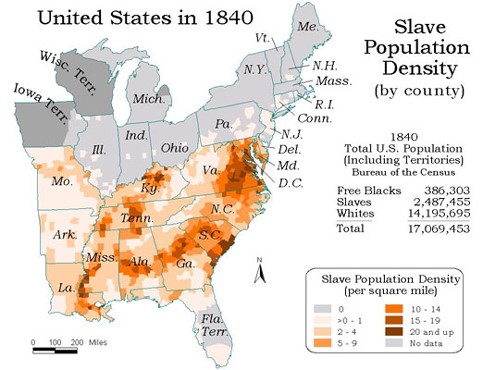

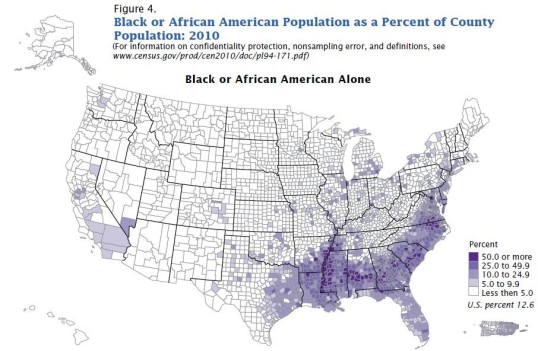

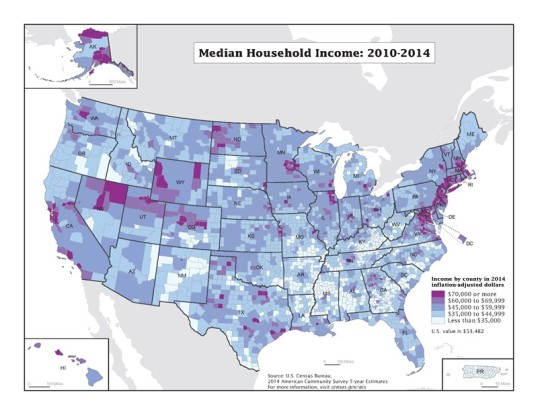

